I have been going through various aspects of Google technology for the past month, and I thought it would be good to invite you to peek into the unique technology underlying the Internet search engine giant. Unlike the architecture of typical high-traffic servers, the Google cluster architecture is based on strong software and lots of PCs. Some say more than 15,000 PCs are part of the Google phenomenon.

I am referring to the Quazen Web article, which gives a fine account of the technology. Let's take a look inside this wonderful search engine. Google's architecture provides reliability across its environment of servers and PCs at the software level, by replicating services across many different machines. Google is also proud of its own failure detection mechanism, which handles the various threats and malfunctions in its network.

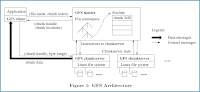

The mechanism: When a user enters a query into Google, the user’s browser first performs a Domain Name System (DNS) lookup to map www.google.com to a particular IP address. To provide sufficient capacity to handle query traffic, the Google service is spread across multiple clusters distributed worldwide.

Each cluster has a few thousand machines, and the geographically distributed setup protects Google against disasters at the data centers (such as those arising from earthquakes and large-scale power failures).

A DNS-based load-balancing system selects a cluster by taking into account the user’s geographic proximity to each physical cluster. In doing so, the load-balancing system minimizes the round-trip time for the user's request.
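To make the cluster-selection idea concrete, here is a toy Python sketch. It is not how Google's DNS system actually works: the cluster names and coordinates, the `has_capacity` flag, and the use of great-circle (haversine) distance as a stand-in for round-trip time are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str           # hypothetical cluster label
    lat: float          # latitude in degrees
    lon: float          # longitude in degrees
    has_capacity: bool  # assumption: whether the cluster can take more queries


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km, used here as a rough proxy for round-trip time."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def pick_cluster(user_lat, user_lon, clusters):
    """Return the closest cluster that still has spare capacity, or None if all are full."""
    candidates = [c for c in clusters if c.has_capacity]
    if not candidates:
        return None
    return min(candidates, key=lambda c: great_circle_km(user_lat, user_lon, c.lat, c.lon))


# Hypothetical example data: the Asian cluster is marked as full.
CLUSTERS = [
    Cluster("us-west", 37.4, -122.1, True),
    Cluster("eu", 53.3, -6.3, True),
    Cluster("asia", 35.7, 139.7, False),
]
```

With this data, a user near London is sent to `eu`, while a user in Tokyo falls back to `us-west` because the nearby cluster is marked full.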

The user’s browser then sends an HTTP request to one of these clusters, and thereafter the processing of that query is entirely local to that cluster. A hardware-based load balancer in each cluster monitors the available set of Google Web servers (GWSs) and performs local load balancing of requests across a set of them. After receiving a query, a GWS machine coordinates the query execution and formats the results into an HTML response to the user’s browser. Query execution consists of two major phases.
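The per-cluster balancing step can be pictured with a small software stand-in. In reality this is a hardware load balancer whose policy the article doesn't describe, so the round-robin rotation, the `mark_down`/`mark_up` health hooks and the `gws1`-style server names below are assumptions.

```python
import itertools


class LocalBalancer:
    """Toy stand-in for the per-cluster load balancer: round-robin over healthy GWS machines."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._cycle = itertools.cycle(self.servers)

    def mark_down(self, server):
        # Called when health monitoring reports a web server as unavailable.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Called when a known web server becomes available again.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        if not self.healthy:
            raise RuntimeError("no healthy web servers")
        # Walk the fixed rotation, skipping servers currently marked down.
        # At most one full lap is needed because at least one server is healthy.
        for _ in range(len(self.servers)):
            server = next(self._cycle)
            if server in self.healthy:
                return server
        raise RuntimeError("unreachable: a healthy server must exist")
```

Round-robin is just the simplest policy to show; a real balancer could equally weigh current load per server.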

In the first phase, the index servers consult an inverted index that maps each query word to a matching list of documents (the hit list). The index servers then determine a set of relevant documents by intersecting the hit lists of the individual query words, and they compute a relevance score for each document.
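A minimal sketch of this first phase, assuming documents are plain strings split on whitespace. The hit-list intersection follows the description above; the scoring rule (total occurrences of the query words in a document) is a hypothetical placeholder, not Google's actual relevance function, which the article does not describe.

```python
from collections import defaultdict


def build_index(docs):
    """Build an inverted index: {word: {docid: occurrences}} (each inner dict is a hit list)."""
    index = defaultdict(dict)
    for docid, text in docs.items():
        for word in text.lower().split():
            index[word][docid] = index[word].get(docid, 0) + 1
    return index


def search(index, query):
    """Return docids containing every query word, best placeholder score first."""
    words = query.lower().split()
    if not words:
        return []
    hit_lists = [index.get(w, {}) for w in words]
    # Intersect the hit lists: keep only documents that contain every query word.
    matching = set(hit_lists[0])
    for hits in hit_lists[1:]:
        matching &= set(hits)
    # Placeholder relevance score: total occurrences of the query words.
    scored = [(sum(hits[d] for hits in hit_lists), d) for d in matching]
    # Highest score first; ties broken by docid so the output is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [d for _, d in scored]
```

For example, with documents `{1: "google cluster architecture", 2: "cluster of cheap pc cluster"}`, the query `"cluster"` ranks document 2 above document 1 because it mentions the word twice.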

This relevance score determines the order of results on the output page. The search process is challenging because of the large amount of data: the raw documents comprise several tens of terabytes of uncompressed data, and the inverted index built from this raw data is itself many terabytes. Fortunately, the search is highly parallelizable: the index is divided into pieces (index shards), each holding a randomly chosen subset of documents from the full index. A pool of machines serves requests for each shard, and the overall index cluster contains one pool per shard. Each request chooses a machine within a pool through an intermediate load balancer, which means each query goes to one machine (or a subset of machines) assigned to each shard. If a shard’s replica goes down, the load balancer avoids using it for queries, and other components of Google's cluster management system try to revive it or eventually replace it with another machine.
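The sharding scheme can be sketched as two steps: scatter documents randomly across shards, then, for each query, pick one live replica from every shard's pool. The function names, the seeded random placement and the `down` set standing in for failed machines are illustrative assumptions; the article does not describe the intermediate load balancer's actual policy.

```python
import random


def assign_shards(docids, num_shards, seed=0):
    """Randomly place each document into one of num_shards index shards."""
    rng = random.Random(seed)  # seeded so the placement is reproducible
    shards = [[] for _ in range(num_shards)]
    for d in docids:
        shards[rng.randrange(num_shards)].append(d)
    return shards


def choose_replicas(pools, down, rng=random):
    """For each shard's pool of replicas, pick one machine that is not marked down."""
    chosen = []
    for shard_id, pool in enumerate(pools):
        alive = [m for m in pool if m not in down]
        if not alive:
            # The article's design keeps several replicas per shard to avoid this case.
            raise RuntimeError(f"shard {shard_id} has no live replicas")
        chosen.append(rng.choice(alive))
    return chosen
```

A failed replica simply drops out of the `alive` list, so queries keep covering every shard while that pool runs at reduced capacity.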

During the downtime, the system capacity is reduced in proportion to the total fraction of capacity that this machine represented. However, service remains uninterrupted, and all parts of the index remain available. The final result of this first phase of query execution is an ordered list of document identifiers (docids). The second phase involves taking this list of docids and computing the actual title and uniform resource locator of these documents, along with a query-specific document summary.
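Because every shard returns its own ranked list, something (presumably the coordinating GWS, though the article does not spell this out) has to merge them into the single ordered list of docids mentioned above. Assuming each shard sends back `(score, docid)` pairs already sorted by descending score, a k-way merge is enough; the function name and input format are hypothetical.

```python
import heapq
from itertools import islice


def merge_shard_results(per_shard, k):
    """Merge per-shard (score, docid) lists, each sorted by descending score, into a global top-k."""
    # heapq.merge expects ascending keys, so negate the score.
    merged = heapq.merge(*per_shard, key=lambda pair: -pair[0])
    return [docid for _, docid in islice(merged, k)]
```

The merge is lazy, so only about k items are pulled from the combined stream no matter how long each shard's list is.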

Document servers (docservers) handle this job, fetching each document from disk to extract the title and the keyword-in-context snippet. As with the index lookup phase, the strategy is to partition the processing of all documents by randomly distributing documents into smaller shards, having multiple server replicas responsible for handling each shard, and routing requests through a load balancer.
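The docserver step can be sketched the same way. Below, `docserver_shard` spreads docids pseudo-randomly over shards by hashing (a stand-in for the random distribution described above), and `kwic_snippet` builds a simple keyword-in-context snippet around the first query-word hit. Both functions, the SHA-1 hashing choice and the three-word window are assumptions for illustration only.

```python
import hashlib


def docserver_shard(docid, num_shards):
    """Map a docid to a docserver shard with a stable pseudo-random hash."""
    digest = hashlib.sha1(str(docid).encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def kwic_snippet(text, query_words, window=3):
    """Return `window` words either side of the first query-word hit, with '...' where text was cut."""
    words = text.split()
    targets = {w.lower() for w in query_words}
    for i, word in enumerate(words):
        # Ignore trailing punctuation when matching, but keep it in the snippet.
        if word.lower().strip(".,;:!?") in targets:
            start = max(0, i - window)
            end = min(len(words), i + window + 1)
            prefix = "... " if start > 0 else ""
            suffix = " ..." if end < len(words) else ""
            return prefix + " ".join(words[start:end]) + suffix
    # No hit: fall back to the opening words of the document.
    return " ".join(words[: 2 * window + 1])
```

For instance, `kwic_snippet("The Google cluster architecture is based on strong software and lots of PCs.", ["software"])` returns `"... based on strong software and lots of ..."`.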

At this point it would be worthwhile to refer to some of the research papers on different aspects of this technology:

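Luiz Barroso, Jeffrey Dean and Urs Hoelzle: 'Web Search for a Planet: The Google Cluster Architecture'

Fay Chang, Jeffrey Dean, Sanjay Ghemawat, Wilson C. Hsieh, Deborah A. Wallach, Mike Burrows, Tushar Chandra, Andrew Fikes, and Robert E. Gruber: 'Bigtable: A Distributed Storage System for Structured Data'

Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung: 'The Google File System'

Mike Burrows: 'The Chubby Lock Service for Loosely-Coupled Distributed Systems'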

Chapter from the book 'The Google Legacy' (PDF)

Reference: Article on Quazen Web on 'Google Cluster Technology', and Google Labs (http://labs.google.com) for research papers on Google technology
