This project makes use of the computational infrastructure of IIITM-K, especially its high-end Linux and Solaris servers. Because IIITM-K has a 24x7 data center and high bandwidth availability, it is a good place to host this portal. Moreover, the projects here are strongly based on service-oriented principles and ready availability.

Figure 1: A typical HPC Stack

IIITM-K has a coherent server farm comprising seven high-end servers and a CD-stack server, with a total capacity approaching a terabyte. It provides a wide variety of intranet and web space services. The server farm is accessible from anywhere through policy-based access mechanisms. Most of the services are accessible through 'My Desktop', the .NET Enterprise Servers, and the Linux servers.

Let me outline the computational resources that this portal uses at IIITM-K. The Computational Chemistry Portal and its associated services, such as the blog and the analytical chemistry portal, are hosted on high-speed Sun Solaris servers, which make up the web part. The back end of the portal is a High-Performance Cluster (HPC) that provides the aggregate computational speed of 5 individual machines.

Apart from this, there are high-performance machines for separate computations, which are operated through the console or shell. Many open source visualization and analysis tools are also part of the project and can be downloaded easily from the portal. The portal conducts workshops and hands-on labs from time to time, and undertakes research projects and training alongside them.

Computational Resources: Statistics

- Solaris web server (Sun Fire V120) with a StorEdge storage server (in terabytes), in a cluster

- Computation server with a 2.4 GHz processor and 2 GB RAM for shell computations

- Computational cluster (HPC) with 5 nodes, each with a 2 GHz processor and 256 MB RAM, fully operational with WebMO for web-enabled computations

- Independent workstations for users, with 2.4 GHz processors and Windows preinstalled

- Computational packages such as GAMESS, NWChem, WebMO (licensed), QMView, Molden, Ghemical, GROMACS, dgbas, and many other open source and free software packages for structure computations, molecular dynamics computations, and visualization

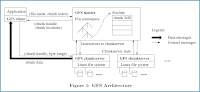

Overview of the Cluster

Figure 2: Cluster Schematic Diagram

We have a Beowulf cluster for computational chemistry. It has 5 nodes in total: a head node and 4 compute nodes, which share the partitioned work. We submit parallel computational chemistry jobs to the machine. The cluster makes the computations 30 times faster than on a single machine with more RAM and a faster processor. The compute part consists of 4 machines, each with an Intel 2.93 GHz dual-core processor and 512 MB RAM. The NFS mount, acting as swap, has greatly reduced RAM consumption, and it is available to all 4 systems connected in parallel. We are also planning to integrate Sun Grid Engine with the cluster to provide grid services.

Cluster Software

The following software is installed:

1. GAMESS, in parallel

2. NWChem, in parallel

3. Tinker, sequential

4. WebMO, in parallel, installed on the head node

The applications are installed in /export/apps/

(Only root has access to modify the apps directories and files)

WebMO is yet to be programmed and configured for automatic host detection. Currently, GAMESS and NWChem are hard-coded for parallel execution. The cluster front-end web page can be accessed locally at http://192.168.1.12

Cluster Configuration

Head node: cluster.iiitmk.ac.in

Compute nodes:

- Compute-0-0.local

- Compute-0-1.local

- Compute-0-2.local

- Compute-pvfs-0

Compulsory services on nodes:

nfs, sshd, postfix, gmond, nfslock, network, gmetad, iptables, mysqld, httpd
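To confirm that the services listed above are running on a node, one option is to loop over them. The following is a minimal sketch, assuming a SysV-style `service NAME status` command is available on the nodes and returns success for a running service. The function name `check_services` and the `STATUS_CMD` override are hypothetical, introduced here only for illustration.

```shell
#!/bin/sh
# Services the text lists as compulsory on the nodes.
SERVICES="nfs sshd postfix gmond nfslock network gmetad iptables mysqld httpd"

# check_services: print one status line per service.
# STATUS_CMD is a hypothetical override (not part of any cluster tool) so the
# loop can be tried without the real services; it defaults to `service`.
check_services() {
    for svc in $SERVICES; do
        if ${STATUS_CMD:-service} "$svc" status >/dev/null 2>&1; then
            echo "$svc: running"
        else
            echo "$svc: NOT running"
        fi
    done
}
```

Calling `check_services` as root on a node prints one line per service, which makes a stopped service easy to spot.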

Partitioning scheme

- / (label /, /dev/hda1): 5.8 GB

- /state/partition1 (label /state/partition1): 67 GB

- swap: 1 GB

/state/partition1 is mounted on /export on the head node, and /export is exported to the other nodes via NFS. Whenever you create a user, its home directory is created at /export/home/username. When the user logs in, it is mounted on /home/username.

On the compute nodes, cluster.local:/export/home/username is mounted as /home/chemistry. This is available on each node. Custom-compiled applications are placed in /export/apps on the head node and exported as /share/apps on the compute nodes.

deMon, dgbas, fftw, g03, gamess, gromacs, gv, NWChem, tinker
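The path mapping described above (head node paths under /export appearing as /home and /share/apps elsewhere) can be summarised in a small helper. This is only an illustrative sketch: `node_path` is a hypothetical function name, and it encodes nothing beyond the two mappings stated in the text.

```shell
#!/bin/sh
# node_path: translate a head-node path under /export into the path
# under which the text says it is visible after the NFS mount.
node_path() {
    case "$1" in
        # /export/apps on the head node is seen as /share/apps on compute nodes
        /export/apps|/export/apps/*) printf '%s\n' "/share/apps${1#/export/apps}" ;;
        # /export/home/USER is mounted as /home/USER
        /export/home/*)              printf '%s\n' "/home/${1#/export/home/}" ;;
        # anything else is not covered by the text; return it unchanged
        *)                           printf '%s\n' "$1" ;;
    esac
}
```

For example, `node_path /export/apps/gamess` prints `/share/apps/gamess`, which is the path a job script on a compute node would use.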

A directory called 'scr' is created on /state/partition1, and its ownership is changed to the user chemistry. This scr directory is not shared (only the head node partition, /state/partition1, is shared).
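Creating the scratch directory could look like the sketch below. It assumes the partition is /state/partition1, as named earlier in this section, and that the owner is the user chemistry; both are defaults that can be overridden. `make_scratch` is a hypothetical helper name, not an existing cluster command.

```shell
#!/bin/sh
# make_scratch BASE OWNER: create BASE/scr and hand it to OWNER.
# Defaults follow the text: partition /state/partition1, user chemistry.
make_scratch() {
    base=${1:-/state/partition1}
    owner=${2:-chemistry}
    # -p makes the call safe to repeat if the directory already exists
    mkdir -p "$base/scr" && chown "$owner" "$base/scr"
}
```

Run as root with no arguments, this gives the layout described above. Because the directory is local to each machine, it would have to be created on every node that needs scratch space.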

You can execute the same command on all nodes by issuing it just once on the head node, in this manner:

# cluster-fork "command name"

e.g.: # cluster-fork "df -h"
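The idea behind running one command on every node can be illustrated with a plain ssh loop. This sketch is not the implementation of cluster-fork; it assumes passwordless ssh from the head node and uses the compute node names from the Cluster Configuration list. `fork_command` and the `REMOTE_SHELL` override are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Compute nodes as listed under Cluster Configuration.
NODES="Compute-0-0.local Compute-0-1.local Compute-0-2.local Compute-pvfs-0"

# fork_command CMD: run CMD on each node in turn, labelling the output.
# REMOTE_SHELL is a hypothetical override for dry runs; it defaults to ssh.
fork_command() {
    for node in $NODES; do
        echo "$node:"
        ${REMOTE_SHELL:-ssh} "$node" "$1"
    done
}
```

Setting `REMOTE_SHELL=echo` before calling `fork_command "df -h"` prints the node and command pairs without contacting any machine, which is a safe way to check the loop first.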

(Next - Backup Scheme of Computational Clusters)